Combatting misinformation needs to be job one for digital platforms. Some are making progress. Others are lagging behind the technological innovations that facilitate the spread of misinformation. I selected two digital platforms to pursue two different lines of inquiry. First, I wanted to learn about a company I was less familiar with, so that my own biases and personal experience would not color the analysis, so I chose LinkedIn. Second, I wanted to look at Twitter's efforts, since that is my platform of choice.

FULL DISCLOSURE: I have a LinkedIn profile, but I have never been active there.

A career-oriented social media platform and the professional community that thrives there are not generally thought of as a hotbed or target for misinformation, aside from the occasional too-good-to-be-true business opportunity or self-help regimen. However, LinkedIn fights the same battles against misinformation as other, more prominent social media platforms, and it also faces challenges that stem from its particular business model and user base.

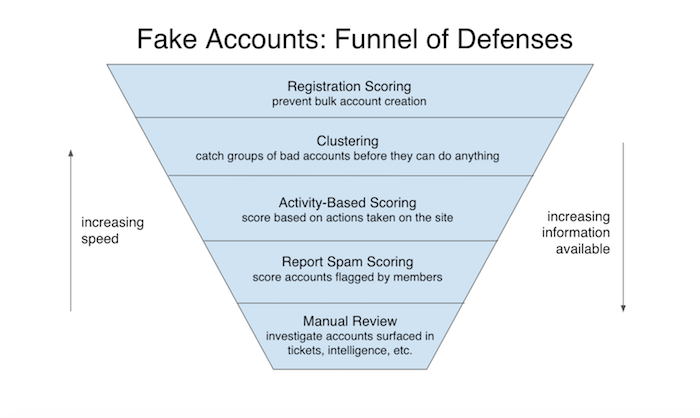

All the major online communities share one problem, fake accounts, and LinkedIn is no exception. The company reported that in the first half of 2019, more than 20 million fake profiles were either taken down or blocked at registration. Notably, 98% of those fraudulent accounts were removed or blocked by automated systems built on artificial intelligence and machine learning.

Another way LinkedIn can be exploited, as discussed in this Newsweek article, is through data harvesting by foreign intelligence agencies. LinkedIn users are professionals seeking employment or networking opportunities, and they readily share their work experience and background details. Members of the community also participate in industry-specific forums. Some users hold sensitive positions in their organizations or are current or former members of the national security apparatus. The site is a treasure trove for bad actors who can weaponize that data to sow distrust or dissent, or to dispute the achievements of others.

LinkedIn has committed to combatting misinformation. Its Professional Community Policies are easy to follow and offer plenty of dos and don'ts, counting on users to police themselves and show respect toward others. The company has also produced brief informational videos that give an overview of its guidelines and best practices.

Twitter has created an innovative approach to fighting misinformation, with a name that sounds like a military operation: Birdwatch. Users sign up to be contributors (some might call them informants), and they are empowered to flag questionable content and attach notes that give other readers more context. It is designed to function as a collaborative fact-checking system. The pilot program launched in January 2021 and is still available only on a limited basis. A related feature allows users to report and classify suspected misinformation, and it was recently expanded into new markets worldwide.

According to the Birdwatch Guide webpage, Twitter is well aware that people will try to game the system if they can. It is important that Twitter has established eligibility criteria for participants, so that all users know that "Birdwatchers" have been vetted and trained to be the hall monitors of Twitter. But there is a disconnect. Why are these digital platforms, huge for-profit companies, relying on the kindness and selflessness of civic-minded individuals to mind the store? Why are they setting up "neighborhood watches" of good neighbors when bad actors often have the motives, resources, and technical know-how to evade well-meaning but ill-equipped civilians?

Both platforms use AI and machine learning to handle the vast majority of misinformation detection. They also rely heavily on engaged users to serve as eyes and ears on the front lines, alerting the companies to potential violations. Given the rapid advances in the technology used to create and disseminate misinformation, I am not sure how sustainable that approach is.

This week, I watched the sixth episode of Star Wars: The Book of Boba Fett. In this remarkable episode, the world was treated to a de-aged, deepfaked Mark Hamill as Luke Skywalker, acting alongside the other characters. The digital Luke was brought to life with the help of a renowned deepfake artist, and while the result was not perfect, it offered a glimpse of what is rapidly becoming commonplace. Digital platforms need to make a long-term commitment to proactive strategies that identify both the content that can be classified as misinformation and the individuals, organizations, and networks that help it spread. As deepfakes and other forms of manipulation continue to improve, we can only hope that the means of detecting and labeling manipulated content will keep pace.